.NET nanoFramework, just like the full .NET framework, has multithreading capability out of the box.

Because .NET nanoFramework (usually) runs on a single-core processor, there is no true parallel execution of multiple threads. Instead, a scheduler allots a 20ms time slice to each thread in round-robin fashion. If a thread is able to run (meaning it is not blocked for any reason), it executes for up to 20ms, after which execution moves on to the next “ready to run” thread, and so on.

By default, a .NET program is started with a single thread, often called the primary thread. However, it can create additional threads to execute code in parallel or concurrently with the primary thread. These threads are often called worker threads.

Why use multiple threads?

Unlike a desktop or server application, which can take advantage of running on a multiprocessor or multi-core system, a .NET nanoFramework application doesn't use threads to gain raw speed. Threads are nevertheless a very useful programming technique when you are dealing with several tasks that benefit from simultaneous execution or that need input from one another. For example: waiting for a sensor to be read in order to perform a computation and decide which action to take. Another example is interacting with a device that requires constantly polling for data. A web service serving requests is yet another typical multithreading use case.

How to use multithreading

You create a new thread by creating a new instance of the System.Threading.Thread class. There are a few ways of doing this.

The first is to create a thread object and pass it the method to run, wrapped in a ThreadStart delegate. The method can be either a static method or an instance method of an object that has already been created.

```csharp
Thread instanceCaller = new Thread(
    new ThreadStart(serverObject.InstanceMethod));
```

The thread can then be started like this:

```csharp
instanceCaller.Start();
```

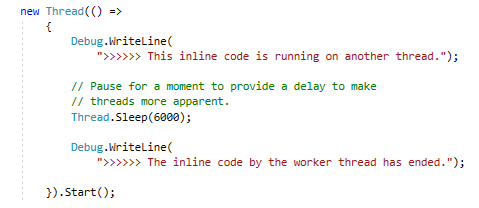
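For reference, here is a self-contained sketch of both variants, one thread running an instance method and one running a static method. The ServerObject class, its members and the ThreadStartDemo wrapper are hypothetical names made up for illustration; Join, which blocks the caller until the thread has finished, is part of the standard Thread API.

```csharp
using System.Diagnostics;
using System.Threading;

// Hypothetical class, only here to provide an instance and a static method.
public class ServerObject
{
    // Set by the worker thread so the caller can check the method ran.
    public bool InstanceMethodRan;
    public static bool StaticMethodRan;

    public void InstanceMethod()
    {
        Debug.WriteLine("InstanceMethod is running on a worker thread.");
        InstanceMethodRan = true;
    }

    public static void StaticMethod()
    {
        Debug.WriteLine("StaticMethod is running on a worker thread.");
        StaticMethodRan = true;
    }
}

public static class ThreadStartDemo
{
    public static ServerObject Run()
    {
        var serverObject = new ServerObject();

        // Instance method: the delegate keeps a reference to serverObject.
        Thread instanceCaller = new Thread(new ThreadStart(serverObject.InstanceMethod));

        // Static method: no object instance is needed.
        Thread staticCaller = new Thread(new ThreadStart(ServerObject.StaticMethod));

        instanceCaller.Start();
        staticCaller.Start();

        // Block the calling thread until both workers have finished.
        instanceCaller.Join();
        staticCaller.Join();

        return serverObject;
    }
}
```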

Another (and very cool!) way is to create a thread object using a lambda expression.

(Note that, written this way, no reference to the thread is kept, and the thread is started immediately.)

```csharp
new Thread(() =>
{
    Debug.WriteLine(
        ">>>>>> This inline code is running on another thread.");

    // Pause for a moment to provide a delay to make
    // threads more apparent.
    Thread.Sleep(6000);

    Debug.WriteLine(
        ">>>>>> The inline code by the worker thread has ended.");
}).Start();
```
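If you do want to keep a reference, for example to wait for the thread with Join, a minimal sketch is to assign the lambda-based thread to a variable before starting it. The LambdaThreadDemo class is a hypothetical wrapper used for illustration. The lambda can also capture local variables, which is one simple way of getting data in and out of a thread.

```csharp
using System.Diagnostics;
using System.Threading;

public static class LambdaThreadDemo
{
    public static int Run()
    {
        int result = 0;

        // Keep the Thread in a variable instead of chaining .Start(),
        // so the caller can start it and later wait for it.
        var worker = new Thread(() =>
        {
            Debug.WriteLine(">>>>>> Lambda running on a worker thread.");

            // The lambda captures the local variable 'result'.
            result = 6 * 7;
        });

        worker.Start();

        // Wait for the worker to finish before reading the captured variable.
        worker.Join();

        return result;
    }
}
```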

Passing parameters

Sometimes you need to pass parameters to a worker thread. The simplest way to do this is to use a class that holds the parameters, and have the thread method read them from that class.

Something like this:

```csharp
// Supply the state information required by the task.
ThreadWithState tws = new ThreadWithState(
    "This report displays the number", 42);

// Create a thread to execute the task, and then...
Thread t = new Thread(new ThreadStart(tws.ThreadProc));

// ...start the thread
t.Start();
```
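The ThreadWithState class itself isn't shown in the snippet above. A minimal sketch, assuming it only needs to store the text and the number and expose a method matching the ThreadStart signature, could look like this. The Report property is a hypothetical addition so the result can be inspected afterwards.

```csharp
using System.Diagnostics;
using System.Threading;

// Hypothetical sketch of ThreadWithState: the constructor stores the
// parameters and ThreadProc reads them when the thread runs.
public class ThreadWithState
{
    // State supplied by the thread that creates the worker.
    private readonly string _boilerplate;
    private readonly int _numberValue;

    // Text produced by ThreadProc, kept so it can be checked later.
    public string Report { get; private set; }

    public ThreadWithState(string text, int number)
    {
        _boilerplate = text;
        _numberValue = number;
    }

    // Matches ThreadStart: no parameters, returns void.
    public void ThreadProc()
    {
        Report = _boilerplate + " " + _numberValue + ".";
        Debug.WriteLine(Report);
    }
}
```

Combined with the code above, calling t.Join() after t.Start() waits until ThreadProc has run, after which tws.Report holds "This report displays the number 42.".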

Retrieving data from threads

Another common situation is needing access to data produced by a thread, such as a sensor reading obtained by polling a bus.

In this case, a very convenient approach is to set up a callback that the thread executes just before it finishes.

```csharp
// Supply the state information required by the task.
ThreadWithState tws = new ThreadWithState(
    "This report displays the number",
    42,
    new ExampleCallback(ResultCallback));

Thread t = new Thread(new ThreadStart(tws.ThreadProc));
t.Start();
```
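Neither the ExampleCallback delegate nor the ResultCallback method is shown above. A minimal sketch, assuming the callback simply receives an int result, extends ThreadWithState with a callback field. The doubling in ThreadProc is a stand-in for real work such as a sensor reading, and CallbackDemo is a hypothetical holder for the callback target.

```csharp
using System.Diagnostics;
using System.Threading;

// Hypothetical delegate type for the callback; an int result is assumed here.
public delegate void ExampleCallback(int result);

public class ThreadWithState
{
    private readonly string _boilerplate;
    private readonly int _numberValue;
    private readonly ExampleCallback _callback;

    public ThreadWithState(string text, int number, ExampleCallback callbackDelegate)
    {
        _boilerplate = text;
        _numberValue = number;
        _callback = callbackDelegate;
    }

    public void ThreadProc()
    {
        Debug.WriteLine(_boilerplate + " " + _numberValue);

        // Stand-in for the real work, e.g. reading a sensor.
        int result = _numberValue * 2;

        // Hand the result back just before the thread exits. The callback
        // runs on this worker thread, not on the thread that created it.
        if (_callback != null)
        {
            _callback(result);
        }
    }
}

public static class CallbackDemo
{
    public static int LastResult;

    // Callback target, as used by new ExampleCallback(ResultCallback).
    public static void ResultCallback(int result)
    {
        LastResult = result;
        Debug.WriteLine("Worker thread returned " + result);
    }
}
```

Because the callback executes on the worker thread, any data it touches that other threads also use needs the same protection as any other shared resource.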

Controlling thread execution

Thread execution can be controlled through the Thread API, which allows starting, suspending and aborting a thread.

```csharp
// create and start a thread
var sleepingThread1 = new Thread(RunIndefinitely);
sleepingThread1.Start();

Thread.Sleep(2000);

// suspend 1st thread
sleepingThread1.Suspend();

Thread.Sleep(1000);

// create and start 2nd thread
var sleepingThread2 = new Thread(RunIndefinitely);
sleepingThread2.Start();

Thread.Sleep(2000);

// abort 2nd thread
sleepingThread2.Abort();

// abort 1st thread
sleepingThread1.Abort();
```
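The RunIndefinitely method isn't shown. Below is a hypothetical version written as a loop that keeps running until it is asked to stop through a flag. Where you control the thread's loop, this kind of cooperative stop is an alternative to Abort: the thread finishes its current iteration and exits normally instead of being interrupted at an arbitrary point. The Worker class, the _keepRunning flag and RequestStop are names made up for this sketch.

```csharp
using System.Diagnostics;
using System.Threading;

public class Worker
{
    // volatile so the loop sees the change made by another thread.
    private volatile bool _keepRunning = true;

    // Only written by the worker thread; read by others after Join().
    public int Iterations;

    // Hypothetical RunIndefinitely: loops until RequestStop() is called.
    public void RunIndefinitely()
    {
        while (_keepRunning)
        {
            Iterations++;
            Thread.Sleep(100);
        }

        Debug.WriteLine("Worker left its loop and is ending normally.");
    }

    // Ask the loop to finish; the thread exits after its current iteration.
    public void RequestStop()
    {
        _keepRunning = false;
    }
}
```

A caller would start it with new Thread(worker.RunIndefinitely), call worker.RequestStop() when done, and then Join() the thread to wait for it to end.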

Thread synchronization

A common scenario is a thread that has to wait for an external event before executing a block of code. For these cases there are two classes that block a thread's execution until they are signaled elsewhere: ManualResetEvent and AutoResetEvent. The difference is that a ManualResetEvent stays signaled until the code explicitly resets it, whereas an AutoResetEvent resets itself automatically after releasing a single waiting thread.

```csharp
// ManualResetEvent is used to block and release threads manually.
// It is created in the unsignaled state.
private static ManualResetEvent mre = new ManualResetEvent(false);

// event_1 is created signaled, event_2 unsignaled.
private static AutoResetEvent event_1 = new AutoResetEvent(true);
private static AutoResetEvent event_2 = new AutoResetEvent(false);
```

The API of both types of event is flexible and convenient: events can be created already set or reset, and threads can wait on them forever or for a specified timeout. In the following thread procedure, each thread blocks on mre.WaitOne() until another thread calls mre.Set().

```csharp
private static void ThreadProc()
{
    Debug.WriteLine(
        $"{Thread.CurrentThread.ManagedThreadId} starts and calls mre.WaitOne()");

    mre.WaitOne();

    Debug.WriteLine($"{Thread.CurrentThread.ManagedThreadId} ends.");
}
```
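To see how the two event types differ, here is a small self-contained sketch. The EventsDemo class and its methods are hypothetical; the calls used (WaitOne, WaitOne with a timeout, Set and Reset) are the standard event API. A single Set on the ManualResetEvent releases every waiting thread, while the AutoResetEvent lets one wait through and then resets itself, so a second wait times out.

```csharp
using System.Diagnostics;
using System.Threading;

public static class EventsDemo
{
    // Created unsignaled: WaitOne() blocks until Set() is called.
    private static readonly ManualResetEvent mre = new ManualResetEvent(false);

    private static readonly object _countLock = new object();
    private static int _released;

    private static void ThreadProc()
    {
        Debug.WriteLine(Thread.CurrentThread.ManagedThreadId + " waits on mre");
        mre.WaitOne();

        lock (_countLock)
        {
            _released++;
        }
    }

    // Starts several waiting threads, then releases all of them with one Set().
    public static int ReleaseAllWaiters(int threadCount)
    {
        _released = 0;
        var threads = new Thread[threadCount];

        for (int i = 0; i < threadCount; i++)
        {
            threads[i] = new Thread(ThreadProc);
            threads[i].Start();
        }

        // Gives the threads time to block; not needed for correctness,
        // since the event stays signaled until Reset() anyway.
        Thread.Sleep(200);
        mre.Set();

        foreach (var t in threads)
        {
            t.Join();
        }

        mre.Reset();
        return _released;
    }

    // An AutoResetEvent resets itself after releasing a single wait.
    public static bool AutoResetReleasesOnce()
    {
        var are = new AutoResetEvent(true);      // created signaled

        bool first = are.WaitOne(0, false);      // consumes the signal
        bool second = are.WaitOne(100, false);   // times out: already reset

        return first && !second;
    }
}
```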

Accessing shared resources

In multithreaded applications, another common requirement is managing access to shared resources, such as a communication bus. Several threads may need the resource, but they can only use it one at a time. This is what the C# lock statement is for: it ensures that the code it protects is executed by only one thread at a time. Any other thread that tries to enter a block locked on the same object is put on hold until the thread currently executing it leaves the block.

```csharp
private readonly object _accessLock = new object();

// (…)

lock (_accessLock)
{
    if (_operation >= operationValue)
    {
        _operation -= operationValue;
        appliedAmount = operationValue;
    }
}

// (…)
```
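The snippet above leaves out where _operation, operationValue and appliedAmount come from. A self-contained sketch, assuming _operation is a shared int that several threads subtract from, wraps it in a hypothetical SharedCounter class and lets a few threads compete for it (LockDemo and all method names are also made up for illustration).

```csharp
using System.Threading;

// Hypothetical class wrapping the shared value from the snippet above.
public class SharedCounter
{
    private readonly object _accessLock = new object();
    private int _operation;

    public SharedCounter(int initialValue)
    {
        _operation = initialValue;
    }

    public int Value
    {
        get { lock (_accessLock) { return _operation; } }
    }

    // Subtracts operationValue only if enough is left; returns the amount applied.
    public int TryApply(int operationValue)
    {
        int appliedAmount = 0;

        // Check and update happen under the same lock, so no other thread
        // can change _operation between them.
        lock (_accessLock)
        {
            if (_operation >= operationValue)
            {
                _operation -= operationValue;
                appliedAmount = operationValue;
            }
        }

        return appliedAmount;
    }
}

public static class LockDemo
{
    // Several threads repeatedly try to subtract from the same counter.
    public static int Run(SharedCounter counter, int threadCount, int attemptsPerThread, int amount)
    {
        var threads = new Thread[threadCount];
        var applied = new int[threadCount];

        for (int i = 0; i < threadCount; i++)
        {
            int index = i; // each lambda gets its own copy of the index
            threads[i] = new Thread(() =>
            {
                for (int n = 0; n < attemptsPerThread; n++)
                {
                    applied[index] += counter.TryApply(amount);
                }
            });
            threads[i].Start();
        }

        int total = 0;
        for (int i = 0; i < threadCount; i++)
        {
            threads[i].Join();
            total += applied[i];
        }

        return total;
    }
}
```

Because the check and the subtraction sit inside the same lock, the value never drops below zero, however the threads are interleaved. Without the lock, two threads could both pass the check before either one subtracts. Starting from 1000 with four threads each making 100 attempts of 7, the counter always ends at 6 with 994 applied in total.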

Conclusion

All of this is available out of the box from the C# .NET base class library. It is extremely easy to use and incredibly powerful, and it lets developers working on embedded systems design and code multithreaded applications with very little effort.

When debugging a multithreaded application, developers can take advantage of Visual Studio's debugging capabilities: examine the execution of each thread, set breakpoints, inspect variable contents, and so on, all without any special hardware or debugger configuration.

Samples for all the scenarios above are available in the .NET nanoFramework samples repository. Make sure to clone it and use it to explore the API and its capabilities.

Have fun with C# .NET nanoFramework!

Also posted as a Hackster.io project.

Hi Jose,

Are Tasks supported along with ThreadPool?

I mean, would Task.Run work?

Last one, isn’t 20ms a lot? Seems like too much at first glance.

Thanks for the great work


Hi,

Task.Run won’t work, as the System.Threading.Tasks namespace isn’t implemented; there is only a partial implementation of System.Threading.Thread.

The 20ms “thread quantum” is a “magic” number that’s there for historical reasons. It can easily be adjusted if a particular application needs it (note that this requires rebuilding the firmware).

There is a trade-off between decreasing the quantum and how much faster the system would react: scheduling happens in a loop, and each pass also involves other tasks and counter updates, so it’s not that simple.
